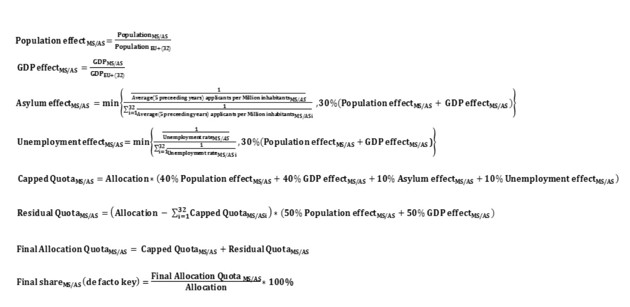

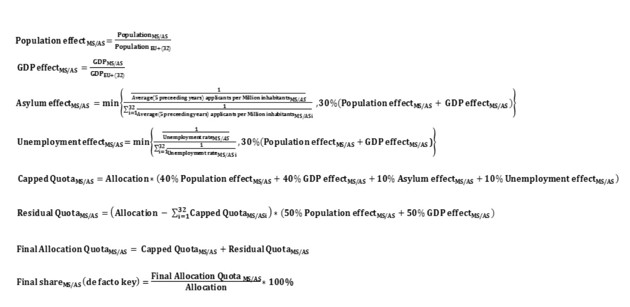

How will refugees be dispersed among European countries? This formula:

On Wednesday, shortly after European Commission President Jean-Claude Juncker announced a new plan to distribute 120,000 asylum-seekers currently in Greece, Hungary, and Italy among the EU’s 28 member states, Duncan Robinson of the Financial Times tweeted a series of grainy equations from the annex of a proposed European regulation, which establishes a mechanism for relocating asylum-seekers during emergency situations beyond today’s acute crisis. Robinson’s message: “So, how do they decide how many refugees each country should receive? ‘Well, it’s very simple…’”

In an FAQ posted on Wednesday, the European Commission expanded on the thinking behind the elaborate math. Under the proposed plan, if the Commission determines at some point in the future that there is a refugee crisis in a given country (as there is today in Greece, Hungary, and Italy, the countries migrants reach first upon arriving in Europe), it will set a number for how many refugees in that country should be relocated throughout the EU. That number will be “not higher than 40% of the number of [asylum] applications made [in that country] in the past six months.”…

What’s most striking to me is the contrast between the sigmas and subscripts in the refugee formula—the inhumanity of technocratic compromise by mathematical equation—and the raw, tragic, heroic humanity on display in recent coverage of the refugees from Syria, Afghanistan, Eritrea, and elsewhere who are pouring into Europe.

The writer hints at the end here that the bureaucratic formula and stories of human lives at stake are incompatible. How could we translate people who need help into cold, impersonal numbers? This is a common claim: statistics take away human stories and dignity. They are unfeeling. They can’t sum the experiences of individuals. One online quote sums this up: “Statistics are human beings with the tears wiped off.”

Yet, we need both the stories and the numbers to truly address the situation. Individual stories are important and interesting. Tragic cases tend to draw people’s attention, particularly if presented in attractive ways. But, it is difficult to convey all the stories of the refugees and migrants. Where would they be told and who would sit through them all? The statistics and formulas help give us the big picture. Just how many refugees are there? (Imagine a situation where there are only 10 refugees but with very compelling stories. Would this compel nations to act.) How can they be slotted into existing countries and systems?

On top of that, you can’t really have the nations of today without bureaucracies. We might not like that they are slow moving or inefficient at times or can be overwhelming. How can you run a major social system without a bureaucratic structure? Would we like to go to a hospital that was not a bureaucracy? How do you keep millions of citizens in a country moving in a similar direction? Decentralization or non-hierarchical systems can only go so far in addressing major tasks.

With that said, the formula looks complicated but the explanation in the text is fairly easy to understand: there are a set of weighted factors that dictate how many refugees will be assigned to each country.